Hired! – Beware of biases

By Gathri Gopalakrishnan

Diversity means different things to different people. There are several dimensions of diversity: age, gender, religion, disability and more. In a short survey of employees from two companies in India (Jubilant and CYC), “Recruitment” emerged as the top priority for diversity and inclusion initiatives, irrespective of the dimension of diversity. This places the immense burden of ensuring diversity across several dimensions on the company’s recruiters. According to research by TheLadders, recruiters spend an average of six seconds reviewing a candidate’s résumé before they make a decision. This implies that they often rely on instinct or ‘gut feeling’ when choosing a candidate. While instinct is a useful tool in decision making, it brings with it the danger of unconscious bias.

Unconscious bias is a psychological phenomenon in which our brain’s perception of certain people is skewed by our past knowledge and experiences. It’s not that we are good or bad people in the way we judge others; our brains simply have to process so much information that they have evolved shortcuts to make that processing easier. The problem is that these shortcuts do not always help us make the right decisions. If you are curious, feel free to take a test that helps identify unconscious biases at https://implicit.harvard.edu/implicit/takeatest.html. I tried the test on skin-tone bias and was surprised to see that I was slightly biased in favor of lighter skin tones. Despite being Indian (skin tone: brown), my brain seems to lean in favor of lighter skin. I have noooo idea why! And I promise to work on fixing this bias!

My unconscious bias probably has no significant impact on humanity, but imagine the compound effect of such a bias among recruiters and employees across the globe. When Google released its first diversity report in 2014, it was a wake-up call for companies to get to the root of the diversity gap. Google soon realized that unconscious bias was a major hurdle in its diversity journey, and ever since, Google and several other companies have been trying to educate individuals about their unconscious biases. Take a look at Google’s unconscious bias training workshop here: https://rework.withgoogle.com/guides/unbiasing-raise-awareness/steps/watch-unconscious-bias-at-work/

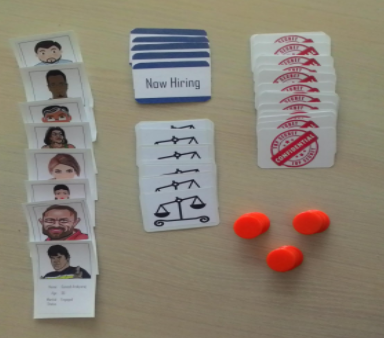

‘Hired!’ is a card game that addresses biases in recruitment. The players represent the hiring committee of a company and must balance their own interests against the company’s overall interest. Each player is assigned a particular bias at the beginning of the game that affects his/her perception of the candidates. The game puts the players in a safe environment where calling out each other’s biases is acceptable and rewarding, and it gives them an opportunity to understand how a biased decision can affect the company as a whole. The following sections are an account of the evolution of Hired!

The Beginning

Hired! was proposed by Jesse at one of the brainstorming sessions. The idea was to build a game in which each player had a particular bias and the others had to watch out for this biased behavior. The link with recruitment was made almost instantly, as hiring seemed like the situation where a person’s bias could have the biggest impact. To get a more concrete understanding of how it would work, we decided to try out a paper version of the game. At this point we had just biases and candidates. We realized even before we started that we needed a mechanism to determine the actual worth of each candidate. And thus we had a deck of cards (sheets of paper, of course!) representing how good a candidate actually was. Each card had a number from 1 to 4, 4 being the highest. Each player would pick up a scoring card for each candidate (without revealing it to the other players), and the sum of all these scores was the candidate’s actual worth. Whether a candidate was hired was decided by a discussion followed by a simple majority vote.

At this stage, the company’s score was computed as the sum of the actual worth of all the candidates who were hired.
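The early scoring rules can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the game’s actual implementation: the `Candidate` class and the `worth`, `is_hired` and `company_score` names are hypothetical, and treating a tied vote as “not hired” is an assumption (the rules above only say the decision was a simple majority vote).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    # One hidden scoring card per player (1 to 4 in the first paper version).
    scoring_cards: list[int]
    # One vote per player: True means "hire".
    votes: list[bool]

    def worth(self) -> int:
        """Actual worth: the sum of every player's hidden scoring card."""
        return sum(self.scoring_cards)

    def is_hired(self) -> bool:
        """Simple majority rule. A tie (possible with an even number of
        players) counts as 'not hired' here; this is an assumption."""
        yes = sum(self.votes)
        return yes > len(self.votes) - yes


def company_score(candidates: list[Candidate]) -> int:
    """Early company score: sum of the actual worth of all hired candidates."""
    return sum(c.worth() for c in candidates if c.is_hired())
```

For example, a candidate holding cards 4, 2 and 3 with two of three votes in favor is hired and adds 9 to the company score. The same code works unchanged if the cards only carry the values 0 and 1.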

The Loopholes & Fixes

After the first round of gameplay, the most important thing we noticed was that the arguments were poorly structured and players often ended up contradicting each other. We realized that players needed more solid information on which to base their arguments. This information had to be specific and binary: each characteristic had to be clearly good or bad, as gray areas often led to confusion and deadlocks. So for the next version of the game, we added specific information to the scoring cards, and the scores were either 1 or 4. These numbers were later changed to 0 and 1 to make the math easier 😛

Despite the clearly defined information, we noticed that some arguments still led to a stalemate because the players were not clear about what role they were hiring for. For example, if a candidate had a visual impairment, one player might argue that it is impossible for this candidate to serve as a designer, while another might argue that the candidate is adept at sales or public speaking. This led to the addition of a new category of cards, the job description cards, which define the role the candidate is being hired for.

So far, the simple voting system helped in reaching a quick decision. However, we could foresee two problems. First, if the number of players was even, a vote could end in a deadlock. Second, players on the minority side did not have a fighting chance, which made the game a little unfair. To fix this, we came up with a betting system: each player would start out with a given number of coins and could bet in favor of a candidate. Although betting worked in theory, it didn’t fit the spirit of the game. After briefly considering a shared resource model, we settled on a voting token system. Each player had a certain number of voting tokens and could use them to skew the decision in their favor. This voting system has stayed ever since.

Now that the voting system was clear, new problems appeared! One that immediately needed fixing was that players had too little incentive to guess others’ biases; without it, they were rarely tempted to guess. We fixed this easily by introducing a voting-token-based incentive for guessing: if A guesses B’s bias correctly, A gets one of B’s tokens, but if A is wrong, A has to give B one of A’s own tokens. This system encouraged people to guess others’ biases while ensuring that none of the players is unfairly disadvantaged.
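The guessing incentive boils down to moving a single voting token between two players. A minimal sketch follows; `resolve_guess`, the player names and the bias labels are hypothetical, and the rules above do not say what happens when the losing player has no tokens left, so the sketch simply skips the transfer in that case (an assumption).

```python
from __future__ import annotations


def resolve_guess(tokens: dict[str, int], guesser: str, target: str,
                  guessed_bias: str, actual_bias: dict[str, str]) -> bool:
    """Settle one bias guess by moving a single voting token.

    Correct guess: the guesser takes one of the target's tokens.
    Wrong guess: the guesser gives one of their own tokens to the target.
    Returns True if the guess was correct.
    """
    correct = actual_bias[target] == guessed_bias
    loser, winner = (target, guesser) if correct else (guesser, target)
    # Assumption: if the loser has no tokens left, nothing changes hands.
    if tokens[loser] > 0:
        tokens[loser] -= 1
        tokens[winner] += 1
    return correct
```

Because a token only ever moves between players and is never created or destroyed, the total number of tokens in play stays constant: guessing redistributes voting power rather than adding to it.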

After a lot of internal testing, it was time to test the game with players other than the designers! We made some nice, fancy cards to play with and were good to go.

Playtesting

The game was playtested four times with Gamelab members who were not part of the game design. It was amazing to see interesting strategies evolve. One player argued in favor of a candidate he was biased against to ensure that his bias wasn’t guessed. Another player used his minority position to mislead the others. The feedback from the playtests helped us fine-tune the voting system.

Scoring was another area that underwent changes. The initial idea was to use the sum of all hired candidates’ scores as the company score. However, with multiple teams competing simultaneously, we needed a system that was not affected by chance, so we switched to a rank-based system. Now the objective was simply to see whether the company hired the best of the available candidates. Hiring with a bias also had to have a bigger impact on the individual player. So in the final version, a player’s individual score is the number of tokens they hold at the end minus twice the number of candidates against whom the player is biased.
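The final individual score can be written as s = t − 2b, where t is the number of tokens a player holds at the end and b is the number of candidates the player is biased against. The post does not say whether b counts all such candidates or only those who were hired, so this minimal sketch takes the count as an input. The function name and the example numbers are hypothetical.

```python
def individual_score(final_tokens: int, biased_candidates: int) -> int:
    """Final-version individual score: voting tokens held at the end
    minus twice the number of candidates the player is biased against."""
    return final_tokens - 2 * biased_candidates


# Hypothetical end-of-game state: (tokens left, biased-against candidates).
players = {"A": (5, 2), "B": (3, 0)}
for name, (tokens, biased) in players.items():
    print(name, individual_score(tokens, biased))  # prints "A 1", then "B 3"
```

The factor of 2 means each biased candidate costs as much as two tokens, which is the gain from two correct bias guesses. So a player who ends with more tokens can still finish behind a less biased player.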